Computation in which many calculations are carried out simultaneously.

A concurrent program needs to perform several possibly unrelated tasks at the same time. In contrast, a parallel program solves a single problem.

By definition, a concurrent program deals continuously with networking protocols, databases, and the like. A typical parallel program is likely to be more focused: it streams data in, crunches it for a while, then streams data back out.

There are many aspects to the parallel execution of a program: threads are created, execute on a processor, transfer data to and from remote processors, and synchronise with other threads. Managing all of these aspects on top of constructing a correct and efficient algorithm is what makes parallel programming so hard.

Events happen in both time and space. It is possible for two events to occur in the same place one after the other in time (i.e. sequentially), and equally possible for events to occur in different places at the same time (i.e. concurrently, or in parallel).

Divide & Conquer

To solve a large instance of a problem, break it into smaller instances of the same problem, and use the solutions of these to solve the original problem. The branching factor of a divide-and-conquer algorithm is the number of subproblems into which a problem is divided. A divide-and-conquer algorithm is balanced if it divides the initial problem into equally-sized subproblems.
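As a minimal sketch of the idea, merge sort is a balanced divide-and-conquer algorithm with a branching factor of 2. The choice of merge sort and the names `merge` and `merge_sort` are illustrative assumptions, not something this page prescribes.

```python
def merge(left, right):
    """Combine two already sorted lists into one sorted list."""
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])   # at most one of these two tails is non-empty
    merged.extend(right[j:])
    return merged


def merge_sort(items):
    """Sort by splitting into two equally sized subproblems (branching factor 2)."""
    if len(items) <= 1:       # smallest instance: already solved
        return list(items)
    mid = len(items) // 2
    # Solve the two smaller instances, then use their solutions
    # to solve the original problem.
    return merge(merge_sort(items[:mid]), merge_sort(items[mid:]))
```

The two recursive calls are independent of each other, which is what makes this shape of algorithm a natural candidate for parallel execution.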

Communicating Sequential Processes

CSP is a process algebra used to describe parallel programs. In this world, a program is a network of processes connected by channels. A channel is a point-to-point, uni-directional, synchronous, unbuffered communication link. Processes only need to be aware of the channels connecting them to other processes, and how to communicate on those channels (generally using the same protocol as the process on the other end).
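The following is a rough sketch of such a channel built on Python's standard `queue` and `threading` modules. The `Channel` class, the `DONE` marker and the two example processes are hypothetical names introduced for illustration; the sketch assumes exactly one sender and one receiver per channel.

```python
import queue
import threading


class Channel:
    """Point-to-point, one-way, synchronous channel (one sender, one receiver)."""

    def __init__(self):
        self._slot = queue.Queue(maxsize=1)

    def send(self, value):
        self._slot.put(value)    # hand the value over
        self._slot.join()        # block until the receiver has taken it

    def receive(self):
        value = self._slot.get()
        self._slot.task_done()   # release the waiting sender
        return value


DONE = object()  # assumed end-of-stream marker, part of this sketch's protocol


def producer(out):
    for n in range(5):
        out.send(n)
    out.send(DONE)


def doubler(inp, results):
    while True:
        value = inp.receive()
        if value is DONE:
            break
        results.append(value * 2)


def run_network():
    """Wire two processes together with one channel and run them to completion."""
    channel = Channel()
    results = []
    workers = [
        threading.Thread(target=producer, args=(channel,)),
        threading.Thread(target=doubler, args=(channel, results)),
    ]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return results
```

Neither process knows anything about the other beyond the channel it holds and the agreed protocol (integers followed by `DONE`).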

Mutex

In its simplest form, a "binary semaphore" is a flag associated with a resource. Two operations act on semaphores: WAIT and SIGNAL. WAIT checks to see if the resource is available. If so, it is marked "unavailable"; if not, the CPU is released to other tasks until the resource becomes available. SIGNAL just marks the resource "available." A true mutex has a more specific use-case and definition, in that only the task that locked the mutex is supposed to unlock it.

Every task, before using a shared resource, does a WAIT on its semaphore, and after using the resource, does a SIGNAL. This is sufficient to ensure that only one task can use that resource at any time -- and yet even if one task is blocked, the other tasks can run normally.
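A small sketch of this discipline, assuming Python's `threading.Semaphore` stands in for the binary semaphore: `acquire` plays the role of WAIT and `release` the role of SIGNAL. The shared counter and the function names are made up for the example.

```python
import threading

resource_flag = threading.Semaphore(1)  # binary semaphore: 1 means "available"
shared = {"count": 0}                   # the shared resource


def task(repeats):
    for _ in range(repeats):
        resource_flag.acquire()          # WAIT: blocks while "unavailable"
        try:
            shared["count"] += 1         # use the resource
        finally:
            resource_flag.release()      # SIGNAL: mark it "available" again


def run_tasks(n_tasks=4, repeats=1000):
    shared["count"] = 0
    threads = [threading.Thread(target=task, args=(repeats,)) for _ in range(n_tasks)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return shared["count"]
```

Because every task brackets its use of the counter with WAIT and SIGNAL, no increment is lost, whatever the interleaving of the tasks.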

Lock-free

Lock-free data structures are thread-safe and interrupt-safe without relying on mutual exclusion mechanisms. They are most useful for inter-process communication, but because they are efficient, they can also safely be used in single-threaded code, which makes them suitable for general-purpose use.
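One classic example is a single-producer, single-consumer ring buffer, sketched below. The class name `SpscRing` is hypothetical, and the sketch only illustrates the index discipline: Python does not expose atomic instructions or memory ordering, which a real implementation in a systems language would need.

```python
class SpscRing:
    """Ring buffer for exactly one producer and one consumer, with no locks."""

    def __init__(self, capacity):
        # One slot always stays empty so that "full" and "empty" look different.
        self._slots = [None] * (capacity + 1)
        self._head = 0  # next write position, modified only by the producer
        self._tail = 0  # next read position, modified only by the consumer

    def push(self, value):
        """Producer side. Returns False instead of blocking when the ring is full."""
        nxt = (self._head + 1) % len(self._slots)
        if nxt == self._tail:
            return False
        self._slots[self._head] = value
        self._head = nxt  # publish the slot only after it has been written
        return True

    def pop(self):
        """Consumer side. Returns None when the ring is empty."""
        if self._tail == self._head:
            return None
        value = self._slots[self._tail]
        self._tail = (self._tail + 1) % len(self._slots)
        return value
```

Each index has a single writer, so the two sides never need to exclude each other; the same object also works unchanged in single-threaded code.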

However, it is worthwhile to reflect on the contrast between the concurrent nature of the world and the sequential nature of the digital computer. Since the main purpose of the computer is to model the world, there would seem to be a serious mismatch.

Multitasking

Round-robin means that each task takes its turn at the CPU, one at a time, in a fixed sequence like a big loop of tasks. Cooperative means that each task has the CPU as long as it wants, and releases the CPU only when it's ready.
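A minimal sketch of a cooperative round-robin scheduler, using Python generators as tasks: a task keeps the CPU until it reaches `yield`, and the scheduler then moves on to the next task in the loop. The names `task`, `run_round_robin` and `demo` are illustrative.

```python
from collections import deque


def task(name, steps, log):
    """A task that does `steps` units of work, releasing the CPU after each one."""
    for step in range(steps):
        log.append(f"{name}{step}")
        yield  # cooperative: the task decides when to release the CPU


def run_round_robin(tasks):
    """Give each task its turn, in a fixed sequence, until all have finished."""
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)          # run the task until its next yield
        except StopIteration:
            continue               # finished tasks leave the loop
        ready.append(current)      # otherwise go to the back of the line


def demo():
    log = []
    run_round_robin([task("a", 2, log), task("b", 3, log)])
    return log
```

Calling `demo()` interleaves the two tasks as `a0, b0, a1, b1, b2`: each takes one turn per trip around the loop, and `b` carries on alone once `a` is done.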

- Parallelism and concurrency need different tools

- Concurrent and multicore programming, Haskell

- Forth multitasking in a nutshell

- The Design of Parallel Programming Languages, Richard P. Gabriel

Interaction nets are a graphical model of computation.

Interaction nets can capture all computable functions with rewriting rules alone; no external machinery, such as copying a chunk of memory or a garbage collector, is needed. Unlike models such as Turing machines, lambda calculus, cellular automata, or combinators, an interaction net computational step can be defined as a constant-time operation, and the model allows for parallelism in which many steps can take place at the same time.

1. Agents

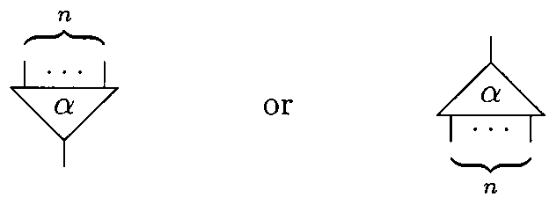

An agent (a) is a cell that has one principal port and a number (n) of auxiliary ports. A pair of agents connected together on their principal ports is called an active pair. Graphically, principal ports are distinguished by arrows.

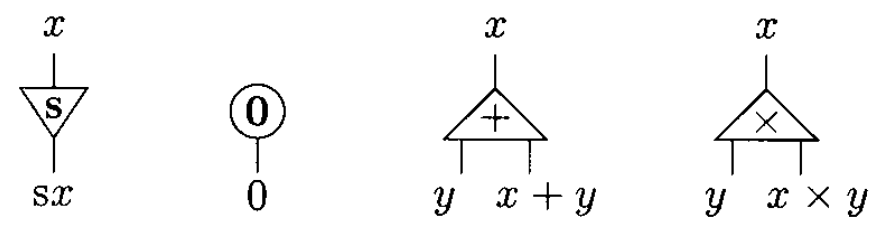

The examples on this page will make use of four agents: Successor (increments a natural number), Zero, Add, and Mul.

2. Interaction Nets

A net is an undirected graph of agents where each port is connected to another one by means of a wire. The following net has three free ports, x, y, and z. Note that a wire may connect two ports of the same agent. A rewriting of a net is performed only on an active pair according to an interaction rule.

3. Rewriting Rules

Here, rewriting is just a convenient word to express a very concrete notion of interaction, which we shall make precise by requiring some properties of rules:

- Agents interact only through their principal port.

- Each variable in a rule occurs exactly twice, once on each side.

- There is at most one rule for each pair of distinct symbols.

In an agent definition, the first port is the principal port; the rest of the ports are listed in the order obtained by moving anticlockwise round the agent. The following definition corresponds to the interaction net on the left-hand side of the Rule 2 figure.

Net: Add(u,y,z), S(u,x)

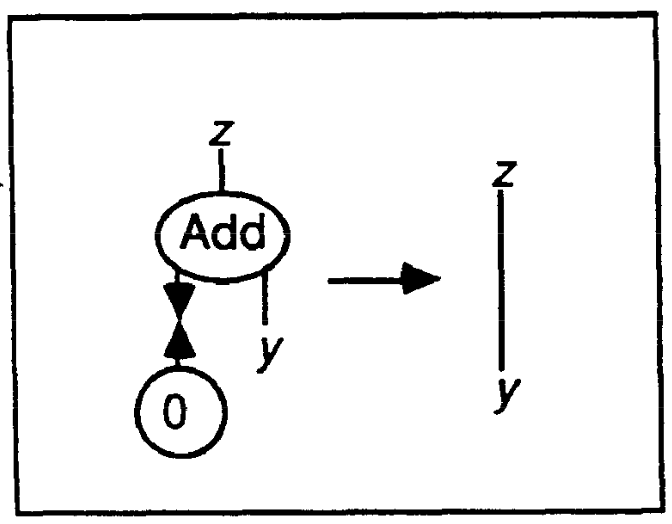

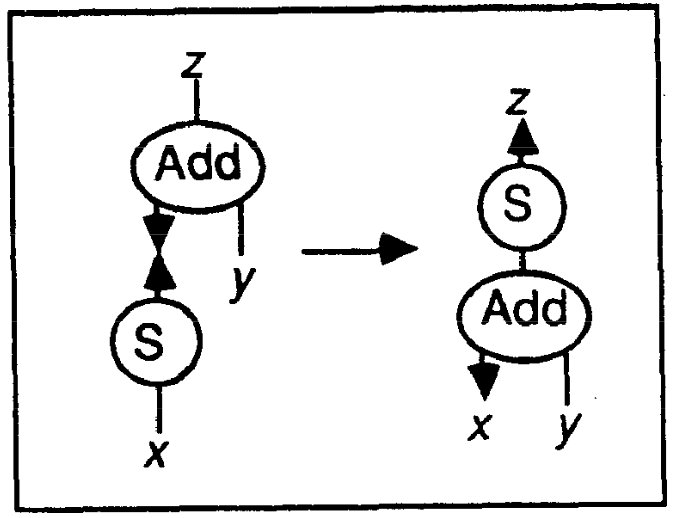

Figures: Rule 1, Rule 2.

In the following notation, an interaction rule consists of a pair of net descriptions separated by an arrow. Agents are capitalized, and free ports are lowercase.

Rules:

Add(u,y,z), Z(u) --> z-y

Add(u,y,z), S(u,x) --> S(z,w), Add(x,y,w)

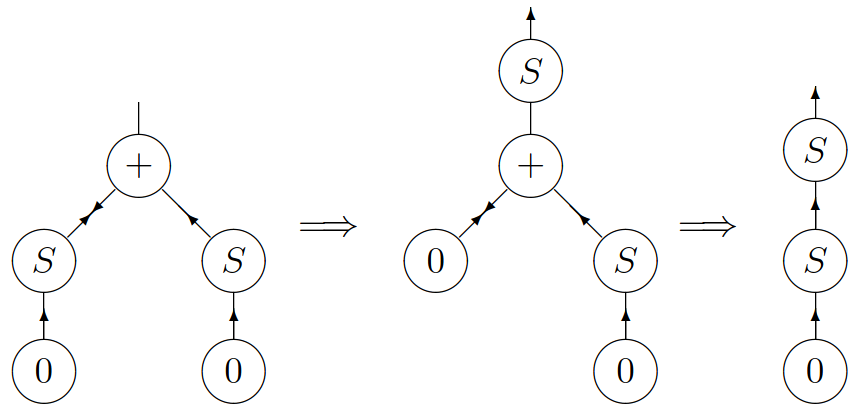

An interaction net that computes 1 + 1 with the rules defined above is shown below, with one active pair already generated. We then show two reductions, which use the previous two rules. The final net, on the right-hand side, is the representation of 2, which is the expected answer.

Programming

From now on, we will use Inpla's notation for rules in which the principal ports are taken out of the brackets and their equivalent connection written as ><. When an agent has an arity of 0, the brackets are removed altogether. Thus, we can write the entire addition program as:

Rules:

add(y, z) >< Z => y~z;

add(y, z) >< S(x) => add(y, S(z))~x;

Exec, computing 1 + 2:

add(res,S(Z))~S(S(Z));

res;

Result: S(S(S(Z))), or 3
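To see the two rules at work outside Inpla, here is a rough term-level simulation in Python. It is not an interaction net engine: numbers are nested tuples, and the helper names (`S`, `add`, `show`) are assumptions made for this sketch. Each loop iteration corresponds to one firing of the `add >< S(x)` rule, and leaving the loop corresponds to the `add >< Z` rule.

```python
Z = ("Z",)  # the Zero agent, arity 0


def S(n):
    """The Successor agent wrapped around the number n."""
    return ("S", n)


def add(acc, arg):
    """Reduce add(res, acc) ~ arg and return the term that ends up wired to res."""
    while arg != Z:
        # add(y, z) >< S(x) => add(y, S(z)) ~ x
        acc = S(acc)
        arg = arg[1]
    # add(y, z) >< Z => y ~ z
    return acc


def show(n):
    """Render a number in the notation used above, e.g. S(S(Z))."""
    return "Z" if n == Z else f"S({show(n[1])})"
```

With these definitions, `show(add(S(Z), S(S(Z))))` mirrors the exec line above and gives `S(S(S(Z)))`.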

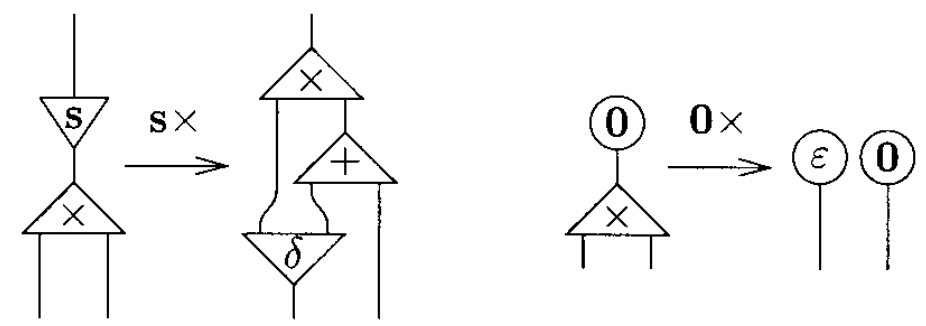

When defining multiplication, note that the argument y is used twice in the first equation, and not at all in the second. For that reason, two extra symbols are needed: duplicate and erase.

S(x) * y = (x * y) + y

0 * y = 0

The idea is that a net representing a natural number should be duplicated when it is connected to the principal port of a duplicate, and it should be erased when it is connected to the principal port of an erase.
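The sketch below imitates this in Python at the level of terms rather than nets, using the usual recursive definition of multiplication on natural numbers. The functions `dup` and `erase` are hypothetical stand-ins for the two extra symbols: `dup` rebuilds a number cell by cell into two copies, and `erase` consumes a number cell by cell.

```python
Z = ("Z",)  # zero


def S(n):
    """Successor of n."""
    return ("S", n)


def dup(n):
    """Duplicate: walk down n and rebuild two separate copies of it."""
    if n == Z:
        return Z, Z
    a, b = dup(n[1])
    return S(a), S(b)


def erase(n):
    """Erase: consume n one cell at a time; nothing is returned."""
    while n != Z:
        n = n[1]


def add(x, y):
    # 0 + y = y        S(x) + y = S(x + y)
    return y if x == Z else S(add(x[1], y))


def mul(x, y):
    if x == Z:
        erase(y)         # 0 * y = 0: y is not used, so it must be erased
        return Z
    y1, y2 = dup(y)      # S(x) * y = (x * y) + y: y is used twice
    return add(mul(x[1], y1), y2)


def to_int(n):
    """Count the successor cells, for readable output."""
    count = 0
    while n != Z:
        count += 1
        n = n[1]
    return count
```

In Python, values can be shared freely, so `dup` and `erase` are only there to make explicit where a net would need them.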

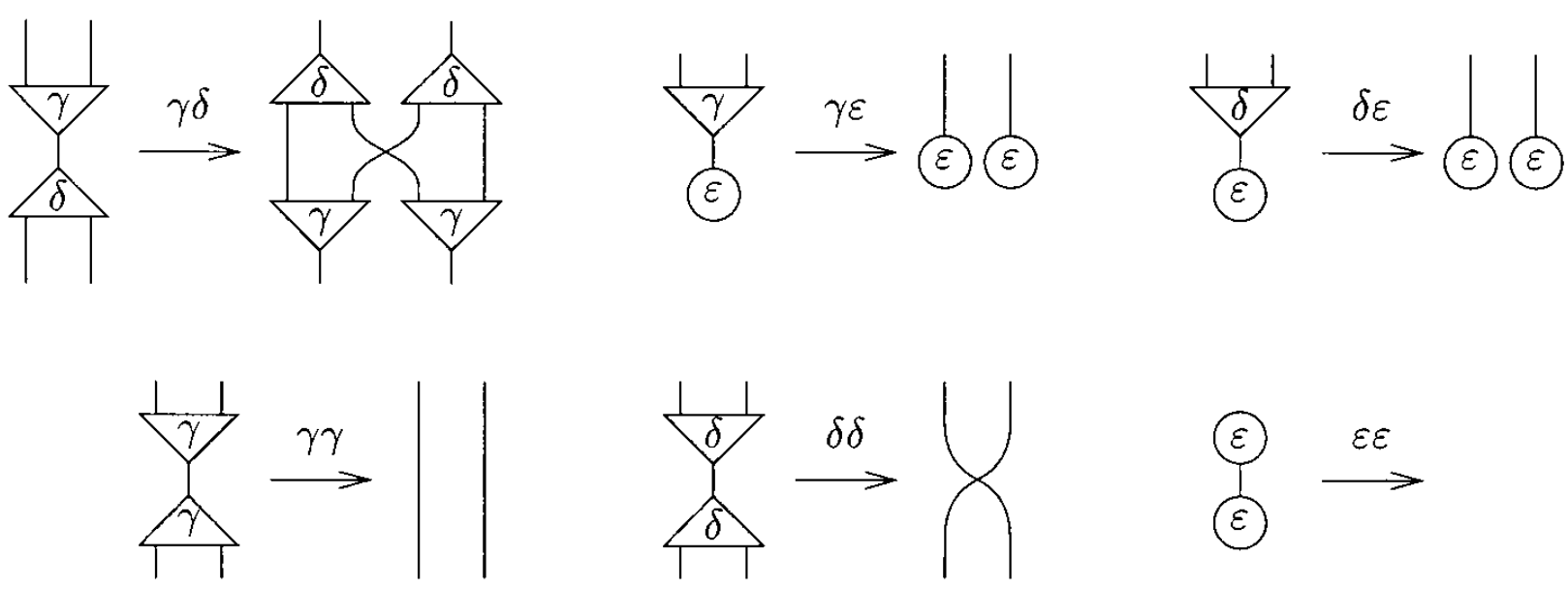

The system of interaction combinators consists of three symbols, called combinators: y (constructor), d (duplicator), and e (eraser). The six interaction rules below are of two kinds: commutation, when the two cells carry different symbols (yd, ye, de), and annihilation, when they carry the same symbol (yy, dd, ee).

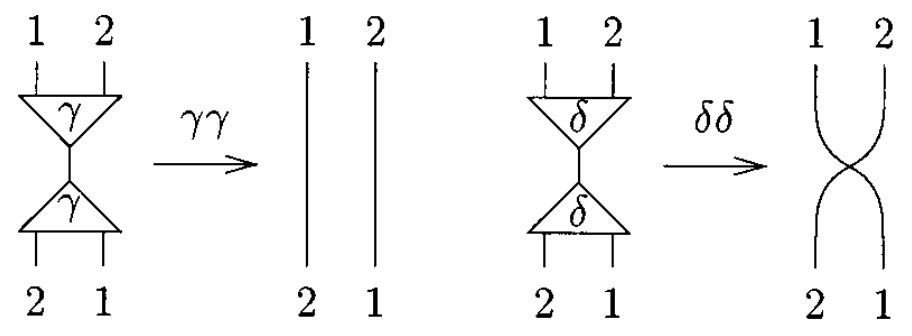

Note that the annihilations for y and d are not the same. Furthermore, if one numbers the auxiliary ports, one realizes that it is yy, not dd, which exchanges the ports.

The fundamental laws of computation are commutation and annihilation.

- Interaction Nets

- Interaction Combinators

- Implementation of a low-level language for interaction nets, Shinya Sato

- Inpla, Interaction Nets as Programming Language

- Towards a Programming Language for Interaction Nets, Ian Mackie

- An Implementation Model for Interaction Nets

- Interaction Nets Playground

- Bologna Optimal Higher-Order Machine

A cellular automaton is a collection of cells on a grid that evolves over time.

One way to simulate a two-dimensional cellular automaton is with an infinite sheet of graph paper along with a set of rules for the cells to follow.
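As a sketch of how such a simulation might look in code, the function below advances a two-dimensional automaton by one generation. It assumes the rules of Conway's Game of Life, which this page does not itself specify, and stores only the live cells in a set so that the sheet is effectively unbounded.

```python
from collections import Counter


def step(live):
    """Return the next generation, given a set of (x, y) live cells.

    Assumed rules (Conway's Game of Life): a cell is live in the next
    generation if it has exactly 3 live neighbours, or if it is live now
    and has exactly 2 live neighbours.
    """
    neighbour_counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    return {
        cell
        for cell, count in neighbour_counts.items()
        if count == 3 or (count == 2 and cell in live)
    }
```

For example, a horizontal row of three live cells turns into a vertical row of three after one step, and back again after two.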

In a cellular automaton, a Garden of Eden is a configuration that has no predecessor. It can be the initial configuration of the automaton but cannot arise in any other way. John Tukey named these configurations after the Garden of Eden in Abrahamic religions, which was created out of nowhere.
